CDH5 Offline Installation Guide

CDH5 offline installation of HDFS, Hive, Spark, and Cloudera Manager 5

Downloads

References

Cloudera documentation index

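Cluster configuration

Install CentOS-7-x86_64-Minimal on every node.

The /iso directory contains CentOS-7-x86_64-DVD-1611.iso.

Set the hostname and /etc/hosts on every node. On CentOS 7, hostnamectl set-hostname <name> changes the running hostname and writes /etc/hostname in one step; the hostname command alone changes the name only until the next reboot.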

node0

[root@localhost ~]# hostname node0-1-100

[root@localhost ~]# cat /etc/hostname

node0-1-100

[root@localhost ~]#

[root@localhost ~]# cat /etc/sysconfig/network

HOSTNAME=node0-1-100

[root@localhost ~]#

node1

[root@localhost ~]# hostname node1-1-101

[root@localhost ~]# cat /etc/hostname

node1-1-101

[root@localhost ~]#

[root@localhost ~]# cat /etc/sysconfig/network

HOSTNAME=node1-1-101

[root@localhost ~]#

node2

[root@localhost ~]# hostname node2-1-102

[root@localhost ~]# cat /etc/hostname

node2-1-102

[root@localhost ~]#

[root@localhost ~]# cat /etc/sysconfig/network

HOSTNAME=node2-1-102

[root@localhost ~]#

node3

[root@localhost ~]# hostname node3-1-103

[root@localhost ~]# cat /etc/hostname

node3-1-103

[root@localhost ~]#

[root@localhost ~]# cat /etc/sysconfig/network

HOSTNAME=node3-1-103

[root@localhost ~]#

Entries added to /etc/hosts on every node:

# cat /etc/hosts

192.168.1.150 node0-1-100

192.168.1.151 node1-1-101

192.168.1.152 node2-1-102

192.168.1.153 node3-1-103
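The same four lines have to be present on every node. A minimal sketch that appends them idempotently, assuming bash and the address plan above; add_host_entry and add_cluster_hosts are hypothetical helpers, and the file path is a parameter so the result can be checked on a copy before touching /etc/hosts:

```shell
#!/usr/bin/env bash
# Append "IP HOSTNAME" to a hosts-format file unless that exact line is present.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  local line="$ip $name"
  # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Address plan used in this guide (node0 to node3).
add_cluster_hosts() {
  local file=$1
  add_host_entry "$file" 192.168.1.150 node0-1-100
  add_host_entry "$file" 192.168.1.151 node1-1-101
  add_host_entry "$file" 192.168.1.152 node2-1-102
  add_host_entry "$file" 192.168.1.153 node3-1-103
}
```

Running it twice leaves one copy of each line; as root, add_cluster_hosts /etc/hosts applies it to the real file.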

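Format the disks (node1 to node3 each have a 2 TB and a 3 TB disk that need formatting; the 3 TB disk is the Hadoop data disk)

Example for a disk smaller than 2 TB:

List the partitions: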

[root@node1-1-101 ~]# fdisk -l

Create the partition:

[root@node1-1-101 ~]# fdisk /dev/sdb

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0xea043dde.

The device presents a logical sector size that is smaller than

the physical sector size. Aligning to a physical sector (or optimal

I/O) size boundary is recommended, or performance may be impacted.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1):

First sector (2048-3907029167, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-3907029167, default 3907029167):

Using default value 3907029167

Partition 1 of type Linux and of size 1.8 TiB is set

Command (m for help): p

Disk /dev/sdb: 2000.4 GB, 2000398934016 bytes, 3907029168 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disk label type: dos

Disk identifier: 0xea043dde

Device Boot Start End Blocks Id System

/dev/sdb1 2048 3907029167 1953513560 83 Linux

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@node1-1-101 ~]#

Format sdb1:

[root@node1-1-101 ~]# mkfs.ext4 /dev/sdb1

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

122101760 inodes, 488378390 blocks

24418919 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=2636120064

14905 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000, 7962624, 11239424, 20480000, 23887872, 71663616, 78675968,

102400000, 214990848

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information:

done

[root@node1-1-101 ~]#

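Example for a disk larger than 2 TB (an MBR/DOS partition table cannot address more than 2 TiB, so use parted with a GPT label):

List the partitions: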

[root@node1-1-101 ~]# parted -l

Create the partition:

[root@node2-1-102 ~]# parted /dev/sda

GNU Parted 3.1

Using /dev/sda

Welcome to GNU Parted! Type 'help' to view a list of commands.

(parted) print

Model: ATA ST3000DM008-2DM1 (scsi)

Disk /dev/sda: 3001GB

Sector size (logical/physical): 512B/4096B

Partition Table: msdos

Disk Flags:

Number Start End Size Type File system Flags

(parted) mklabel gpt

Warning: The existing disk label on /dev/sda will be destroyed and all data on this disk will be

lost. Do you want to continue?

Yes/No? yes

(parted) mkpart primary xfs 0% 100%

(parted) p

Model: ATA ST3000DM008-2DM1 (scsi)

Disk /dev/sda: 3001GB

Sector size (logical/physical): 512B/4096B

Partition Table: gpt

Disk Flags:

Number Start End Size File system Name Flags

1 1049kB 3001GB 3001GB ext4 primary

(parted) quit

Information: You may need to update /etc/fstab.

[root@node2-1-102 ~]#

Format sda1:

[root@node2-1-102 ~]# mkfs.xfs -f /dev/sda1

meta-data=/dev/sda1 isize=512 agcount=4, agsize=183141568 blks

= sectsz=4096 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=732566272, imaxpct=5

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=357698, version=2

= sectsz=4096 sunit=1 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@node2-1-102 ~]#
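The two examples differ because of the partition table: an MBR (DOS) label records sizes as 32-bit counts of 512-byte sectors, which caps it at 2 TiB (2^41 bytes), so larger disks need GPT. A small sketch of that rule, assuming bash; choose_label is a hypothetical helper, and the disk size would come from blockdev --getsize64:

```shell
#!/usr/bin/env bash
# With 512-byte sectors, 2^32 sectors = 2^41 bytes = 2 TiB is the MBR limit.
MBR_LIMIT_BYTES=$(( 1 << 41 ))

# Print the partition table type to use for a disk of the given size in bytes.
choose_label() {
  local size_bytes=$1
  if (( size_bytes > MBR_LIMIT_BYTES )); then
    echo gpt     # parted: mklabel gpt
  else
    echo msdos   # fdisk with a DOS label is enough
  fi
}
```

For example, choose_label "$(blockdev --getsize64 /dev/sdb)" prints msdos for the 2000.4 GB disk above and gpt for the 3001 GB one.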

Mount automatically at boot:

[root@node1-1-101 ~]# mkdir /hdata2 /hdata1

[root@node1-1-101 ~]# vi /etc/fstab

Add the following lines (ext4 for the disk under 2 TB and xfs for the larger one; our test environment uses xfs throughout):

/dev/sdb1 /hdata2 ext4 defaults 0 0

/dev/sda1 /hdata1 xfs defaults 0 0

[root@node2-1-102 ~]# mount -a

[root@node2-1-102 ~]#
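mount -a is the real test, but a common cause of a failed boot after editing fstab is an entry whose mount point directory was never created. A hedged pre-check sketch, assuming bash; check_fstab_mountpoints is a hypothetical helper that lists such entries:

```shell
#!/usr/bin/env bash
# Print each fstab mount point whose directory does not exist;
# return 1 if any are missing.
check_fstab_mountpoints() {
  local fstab=${1:-/etc/fstab} missing=0
  local dev mnt fstype rest
  while read -r dev mnt fstype rest; do
    # Skip blank lines and comments.
    [[ -z $dev || $dev == \#* ]] && continue
    # Swap entries have no real mount point.
    [[ $fstype == swap ]] && continue
    if [[ ! -d $mnt ]]; then
      echo "$mnt"
      missing=1
    fi
  done < "$fstab"
  return "$missing"
}
```

Run check_fstab_mountpoints /etc/fstab && mount -a before rebooting. On systemd-based CentOS 7, adding the nofail option to a data-disk entry is another common safeguard: boot continues even if that disk cannot be mounted.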

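Note: do not reboot until mount -a has succeeded; otherwise the machine may fail to boot and has to be fixed in person in the machine room.

Use the packages from CentOS-7-x86_64-DVD-1611.iso as a yum repository

Copy the required files: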

[root@node3-1-103 ~]# mkdir -p /opt/yum_src

[root@node3-1-103 ~]# mount /iso/CentOS-7-x86_64-DVD-1611.iso /mnt

mount: /dev/loop0 is write-protected, mounting read-only

[root@node3-1-103 ~]# cp -r /mnt/Packages /opt/yum_src/

[root@node3-1-103 ~]# cp -r /mnt/repodata /opt/yum_src/

[root@node3-1-103 ~]# umount /mnt/

[root@node3-1-103 ~]# mv /iso/CentOS-7-x86_64-DVD-1611.iso /opt/yum_src/

[root@node3-1-103 ~]# rm /iso -rf

[root@node3-1-103 ~]#

Configure the yum repository:

[root@node2-1-102 ~]# cp -r /etc/yum.repos.d /etc/yum.repos.d.old

[root@node2-1-102 ~]# rm -f /etc/yum.repos.d/*

[root@node2-1-102 ~]# vi /etc/yum.repos.d/local.repo

[root@node2-1-102 ~]# cat /etc/yum.repos.d/local.repo

[base]

name=CentOS-$releasever - Base

baseurl=file:///opt/yum_src/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7
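If the repository file is generated by a script instead of vi, the heredoc delimiter must be quoted; otherwise the shell expands $releasever (to an empty string) before yum ever sees it. A sketch assuming bash and the same paths as above; write_local_repo is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Write the local yum repository definition. The quoted 'EOF' delimiter keeps
# $releasever literal so that yum, not the shell, expands it.
write_local_repo() {
  local dest=${1:-/etc/yum.repos.d/local.repo}
  cat > "$dest" <<'EOF'
[base]
name=CentOS-$releasever - Base
baseurl=file:///opt/yum_src/
gpgcheck=1
enabled=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-7
EOF
}
```

After writing the file, yum clean all && yum repolist should list the base repository.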

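Install the NTP server

Installation

Install with yum: yum -y install ntpdate ntp

Configuration

Only one machine needs to run the NTP server; the other nodes synchronize with it periodically through crontab.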

// Configure the time server

# vim /etc/ntp.conf

# Deny access to the NTP server from all hosts by default

restrict default ignore

# Allow all hosts on the LAN to query the NTP server

restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap

# Use the local clock as the time source

server 127.127.1.0

// Start the NTP server

# service ntpd start

// Start the NTP server automatically at boot

# chkconfig ntpd on
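The restrict line takes a dotted netmask, whereas pg_hba.conf later in this guide uses CIDR notation (192.168.0.0/24). A sketch for converting a prefix length to the netmask form, assuming bash; cidr_to_mask is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Convert a CIDR prefix length (0-32) to a dotted netmask for lines such as
# "restrict <network> mask <netmask>" in ntp.conf.
cidr_to_mask() {
  local remaining=$1 bits i
  local -a octets=()
  [[ $remaining =~ ^[0-9]+$ ]] && (( remaining <= 32 )) || return 1
  for i in 1 2 3 4; do
    if (( remaining >= 8 )); then
      bits=8
    else
      bits=$remaining
    fi
    # Octet with its top $bits bits set: 256 - 2^(8 - bits)
    octets+=( $(( 256 - (1 << (8 - bits)) )) )
    remaining=$(( remaining - bits ))
  done
  local IFS=.
  echo "${octets[*]}"   # join the four octets with dots
}
```

cidr_to_mask 24 prints 255.255.255.0, matching the restrict line above.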

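Usage

The other cluster nodes run a crontab job that synchronizes with the NTP server at a fixed time every day:

// Switch to the root user

$ su root

// Schedule a daily sync at 00:00 with crontab -e: the job syncs with the NTP server and then writes the system time to the hardware clock. Full paths are used because cron runs with a minimal PATH.

# crontab -e

0 0 * * * /usr/sbin/ntpdate node0-1-100 && /usr/sbin/hwclock -w

// List the jobs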

# crontab -l
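Editing with crontab -e on three nodes by hand is easy to get wrong, and cron runs jobs with a minimal PATH (typically /usr/bin:/bin), in which ntpdate and hwclock from /usr/sbin are not found. A sketch of a filter that replaces any existing ntpdate entry with a single daily one, assuming bash; with_ntp_cron is a hypothetical helper, and the /usr/sbin paths are those of CentOS 7 (confirm with command -v):

```shell
#!/usr/bin/env bash
# Read a crontab on stdin; print it with any ntpdate lines removed and one
# daily 00:00 sync entry appended.
with_ntp_cron() {
  local server=$1
  grep -v 'ntpdate' || true   # grep exits 1 when it prints nothing
  echo "0 0 * * * /usr/sbin/ntpdate $server && /usr/sbin/hwclock -w"
}
```

On each of node1 to node3, as root: crontab -l 2>/dev/null | with_ntp_cron node0-1-100 | crontab -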

Install common RPM packages and prerequisites

[root@node2-1-102 ~]# yum install -y lrzsz unzip zip psmisc perl python-lxml rpcbind.x86_64
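Notes 3 and 7 below are both a missing command (pstree from psmisc, and perl) that only surfaces in the middle of the CM installation. A sketch that checks for such commands up front, assuming bash; missing_commands is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Print each command name that is not found on PATH; return 1 if any are missing.
missing_commands() {
  local cmd missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd"
      missing=1
    fi
  done
  return "$missing"
}
```

Once the packages above are installed, missing_commands pstree perl unzip zip should print nothing and succeed.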

Passwordless SSH login

Installation:

yum -y install openssh-clients

Configuration:

On the master node, run ssh-keygen and press Enter at every prompt:

[root@hadoop-bbb .ssh]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/aaa/id_rsa.

Your public key has been saved in /root/aaa/id_rsa.pub.

The key fingerprint is:

f8:e3:e7:c5:58:9a:4c:36:57:c1:0d:a3:5e:ff:ac:07 root@hadoop-bbb

The key's randomart image is:

+--[ RSA 2048]----+

| .+o |

| ..o.|

| . o |

| . . o . |

| . S + + .|

| . + O E..|

| o = o .o|

| . ... ..|

| .o. .. |

+-----------------+

[root@hadoop-bbb ~]#
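Copying /root/.ssh only gives passwordless login if authorized_keys already contains the master's public key and sshd accepts its permissions (with StrictModes, sshd refuses group- or world-writable key files). A sketch that adds the key once and sets 700/600 permissions, assuming bash; authorize_key is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Add a public key to an authorized_keys file once, with the permissions sshd expects.
authorize_key() {
  local pubkey_file=$1 auth_file=$2 key dir
  key=$(<"$pubkey_file")
  dir=$(dirname "$auth_file")
  mkdir -p "$dir"
  chmod 700 "$dir"
  touch "$auth_file"
  # Append only if this exact key line is not already present.
  if ! grep -qxF -- "$key" "$auth_file"; then
    printf '%s\n' "$key" >> "$auth_file"
  fi
  chmod 600 "$auth_file"
}
```

On the master, before copying /root/.ssh: authorize_key /root/.ssh/id_rsa.pub /root/.ssh/authorized_keys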

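Then append the public key to authorized_keys (cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys; chmod 600 /root/.ssh/authorized_keys) and copy /root/.ssh/ to the other machines: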

[root@cq-t-h-node0-0-150 hadoop]# scp -r /root/.ssh root@cq-t-h-node1-0-151:/root

The authenticity of host 'cq-t-h-node1-0-151 (192.168.0.151)' can't be established.

ECDSA key fingerprint is c0:21:32:a8:2f:a1:25:0f:29:7a:61:77:32:ab:40:04.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'cq-t-h-node1-0-151,192.168.0.151' (ECDSA) to the list of known hosts.

id_rsa 100% 1679 1.6KB/s 00:00

authorized_keys 100% 407 0.4KB/s 00:00

[root@cq-t-h-node0-0-150 hadoop]# scp -r /root/.ssh root@cq-t-h-node2-0-152:/root

The authenticity of host 'cq-t-h-node2-0-152 (192.168.0.152)' can't be established.

ECDSA key fingerprint is b7:26:f6:5a:5c:20:b2:b8:1a:8b:b4:52:8b:43:04:68.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'cq-t-h-node2-0-152,192.168.0.152' (ECDSA) to the list of known hosts.

id_rsa 100% 1679 1.6KB/s 00:00

authorized_keys 100% 407 0.4KB/s 00:00

[root@cq-t-h-node0-0-150 hadoop]# scp -r /root/.ssh root@cq-t-h-node3-0-153:/root

The authenticity of host 'cq-t-h-node3-0-153 (192.168.0.153)' can't be established.

ECDSA key fingerprint is 61:c2:c7:78:34:ce:90:fc:cc:81:77:36:7a:18:e5:60.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'cq-t-h-node3-0-153,192.168.0.153' (ECDSA) to the list of known hosts.

id_rsa 100% 1679 1.6KB/s 00:00

authorized_keys 100% 407 0.4KB/s 00:00

[root@cq-t-h-node0-0-150 hadoop]#

Finally, log in to node1 to node3 with ssh to confirm that no password is required:

[root@cq-t-h-node0-0-150 ~]$ ssh root@cq-t-h-node1-0-151

The authenticity of host 'cq-t-h-node1-0-151 (192.168.0.151)' can't be established.

ECDSA key fingerprint is c0:21:32:a8:2f:a1:25:0f:29:7a:61:77:32:ab:40:04.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'cq-t-h-node1-0-151,192.168.0.151' (ECDSA) to the list of known hosts.

Last login: Wed May 24 14:24:49 2017

[root@cq-t-h-node1-0-151 ~]$ exit

logout

Connection to cq-t-h-node1-0-151 closed.

[root@cq-t-h-node0-0-150 ~]$ ssh root@cq-t-h-node2-0-152

The authenticity of host 'cq-t-h-node2-0-152 (192.168.0.152)' can't be established.

ECDSA key fingerprint is b7:26:f6:5a:5c:20:b2:b8:1a:8b:b4:52:8b:43:04:68.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'cq-t-h-node2-0-152,192.168.0.152' (ECDSA) to the list of known hosts.

Last login: Wed May 24 14:19:42 2017

[root@cq-t-h-node2-0-152 ~]$ exit

logout

Connection to cq-t-h-node2-0-152 closed.

[root@cq-t-h-node0-0-150 ~]$ ssh root@cq-t-h-node3-0-153

The authenticity of host 'cq-t-h-node3-0-153 (192.168.0.153)' can't be established.

ECDSA key fingerprint is 61:c2:c7:78:34:ce:90:fc:cc:81:77:36:7a:18:e5:60.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'cq-t-h-node3-0-153,192.168.0.153' (ECDSA) to the list of known hosts.

Last login: Wed May 24 14:19:40 2017

[root@cq-t-h-node3-0-153 ~]$ exit

logout

Connection to cq-t-h-node3-0-153 closed.

[root@cq-t-h-node0-0-150 ~]$

Install PostgreSQL on the master node (node0)

PostgreSQL is installed with yum here:

yum install postgresql-server -y

Basic configuration:

Create the data directory with mkdir:

mkdir /opt/postgres

Make the postgres user the owner of /opt/postgres with chown:

chown postgres.postgres /opt/postgres

Initialize the database:

sudo -u postgres initdb /opt/postgres

Allow remote logins with md5 password authentication:

vi /opt/postgres/pg_hba.conf

Add an entry that allows all users to connect from the 192.168.0.0/24 internal network with md5-encrypted passwords:

host all all 192.168.0.0/24 md5
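Because this entry only admits clients inside 192.168.0.0/24, every host that connects to PostgreSQL (for example the CM server and the Hive Metastore) needs an address in that range; the /etc/hosts example earlier uses 192.168.1.x addresses. A sketch for checking an address against a pg_hba.conf-style CIDR, assuming bash; ip_to_int and cidr_contains are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if IPv4 address $2 lies inside network $1, written ADDR/PREFIX
# as in the address column of pg_hba.conf.
cidr_contains() {
  local net=${1%/*} prefix=${1#*/} ip=$2 mask
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

cidr_contains 192.168.0.0/24 192.168.1.150 fails, so a client at that address would be rejected by the entry above.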

vi /opt/postgres/postgresql.conf

Change the listen address to 0.0.0.0 (all IPv4 interfaces):

listen_addresses = '0.0.0.0'

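Start: sudo -u postgres pg_ctl start -D /opt/postgres

It is still better to switch to the postgres user and start it with pg_ctl start -D /opt/postgres.

Create the hadoop user and the databases, grant privileges, and set the password to hadoop: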

bash-4.2$ psql

psql (9.2.18)

Type "help" for help.

postgres=# create user hadoop;

CREATE ROLE

postgres=# create database hive;

CREATE DATABASE

postgres=# create database cm;

CREATE DATABASE

postgres=# \password hadoop;

Enter new password:

Enter it again:

postgres=# grant all ON DATABASE hive TO hadoop;

GRANT

postgres=# grant all ON DATABASE cm TO hadoop;

GRANT

postgres=#

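Note: this must be done as the postgres user; switch to it with su postgres.

Stop: sudo -u postgres pg_ctl stop -D /opt/postgres

It is still better to switch to the postgres user and stop it with pg_ctl stop -D /opt/postgres.

Install CM5

Create the required directories on all nodes: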

[hadoop@cq-t-h-node0-0-150 ~]$ mkdir -p /opt/cloudera/parcel-repo /usr/local/cm /usr/local/java /opt/logs

[hadoop@cq-t-h-node0-0-150 ~]$

Upload the files to the master node:

jdk-8u112-linux-x64.tar.gz goes in /usr/local/src

cloudera-manager-centos7-cm5.11.0_x86_64.tar.gz goes in /usr/local/src

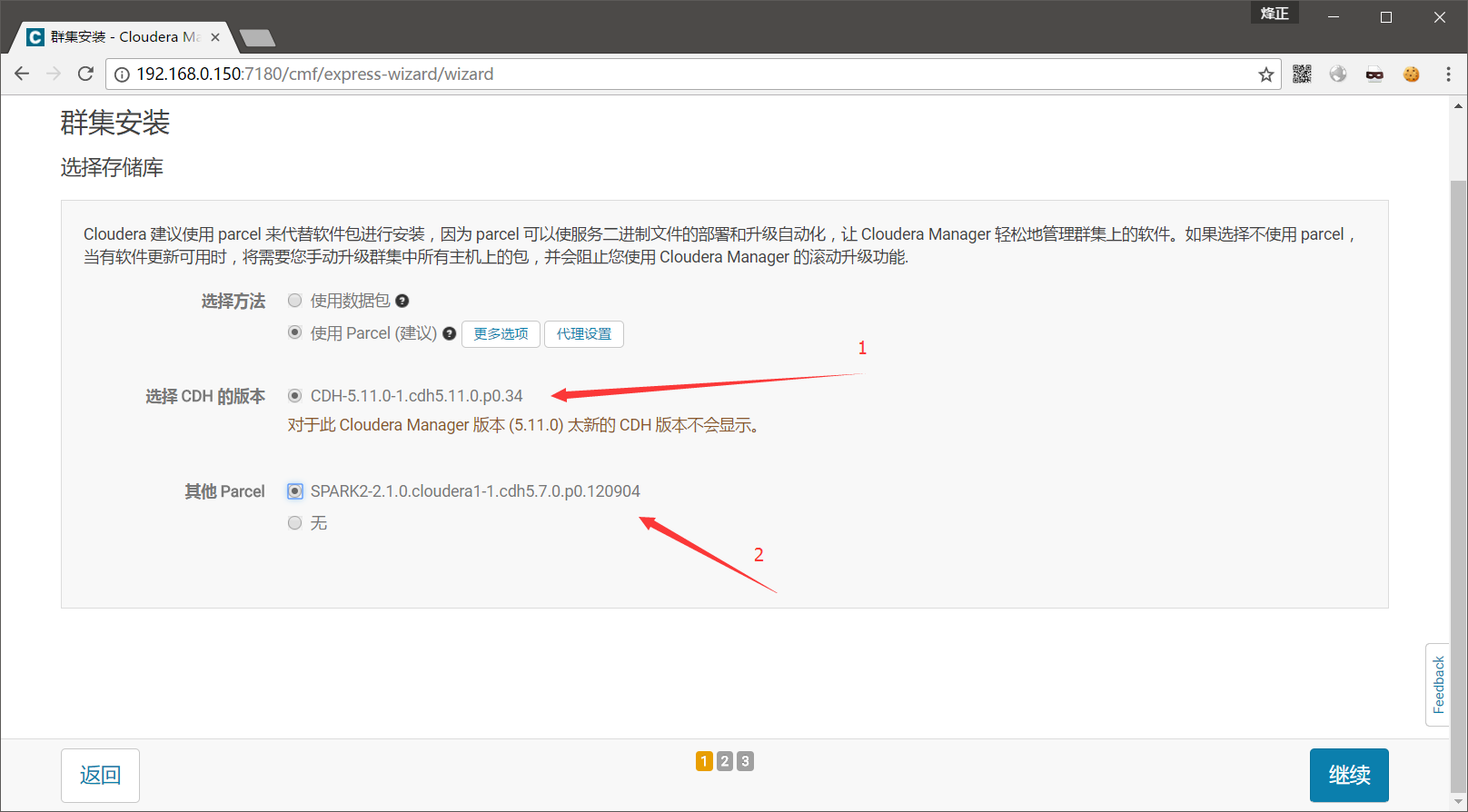

CDH-5.11.0-1.cdh5.11.0.p0.34-el7.parcel goes in /opt/cloudera/parcel-repo

CDH-5.11.0-1.cdh5.11.0.p0.34-el7.parcel.sha goes in /opt/cloudera/parcel-repo

SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el7.parcel goes in /opt/cloudera/parcel-repo

SPARK2-2.1.0.cloudera1-1.cdh5.7.0.p0.120904-el7.parcel.sha goes in /opt/cloudera/parcel-repo

Log in to the master node, extract cloudera-manager-centos7-cm5.11.0_x86_64.tar.gz and jdk-8u112-linux-x64.tar.gz, and move them into place:

[root@cq-t-h-node0-0-150 src]# tar -zxf cloudera-manager-centos7-cm5.11.0_x86_64.tar.gz

[root@cq-t-h-node0-0-150 src]# tar -zxf jdk-8u112-linux-x64.tar.gz

[root@cq-t-h-node0-0-150 src]#

[root@cq-t-h-node0-0-150 src]# mv cm-5.11.0 /usr/local/cm

[root@cq-t-h-node0-0-150 src]# mv jdk1.8.0_112 /usr/local/java

Create symlinks for cm and java to make later upgrades easier:

[root@cq-t-h-node0-0-150 src]# cd /usr/local/java/

[root@cq-t-h-node0-0-150 java]# ln -snf jdk1.8.0_112 java

[root@cq-t-h-node0-0-150 java]# cd ..

[root@cq-t-h-node0-0-150 local]# cd cm/

[root@cq-t-h-node0-0-150 cm]# ln -snf cm-5.11.0 cm

[root@cq-t-h-node0-0-150 cm]# ll /usr/local/cm

total 0

lrwxrwxrwx 1 root root 9 May 24 14:37 cm -> cm-5.11.0

drwxr-xr-x 9 1106 4001 88 Apr 12 17:15 cm-5.11.0

[root@cq-t-h-node0-0-150 cm]# ll /usr/local/java

total 0

lrwxrwxrwx 1 root root 12 May 24 14:36 java -> jdk1.8.0_112

drwxr-xr-x 8 10 143 255 Sep 23 2016 jdk1.8.0_112

[root@cq-t-h-node0-0-150 cm]#
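The -n in ln -snf is what makes the stable links reusable for upgrades: it replaces an existing link to a directory, whereas plain ln -sf would create a new link inside the old target directory. A sketch assuming bash; switch_version is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Point a stable symlink (e.g. /usr/local/java/java) at a versioned directory.
# ln -s: symbolic, -n: replace a link to a directory instead of descending
# into it, -f: overwrite the existing link.
switch_version() {
  local base=$1 version_dir=$2 link_name=$3
  if [[ ! -d $base/$version_dir ]]; then
    echo "missing $base/$version_dir" >&2
    return 1
  fi
  ln -snf "$version_dir" "$base/$link_name"
}
```

switch_version /usr/local/java jdk1.8.0_112 java reproduces the step above; after unpacking a newer JDK next to it, the same call with the new directory name moves JAVA_HOME=/usr/local/java/java over to it.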

Configure the CM agent (point server_host at the master node):

[root@cq-t-h-node0-0-150 cm]# vi /usr/local/cm/cm/etc/cloudera-scm-agent/config.ini

[root@cq-t-h-node0-0-150 cm]# cat /usr/local/cm/cm/etc/cloudera-scm-agent/config.ini |grep server_host

server_host=cq-t-h-node0-0-150

[root@cq-t-h-node0-0-150 cm]#
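Setting server_host with sed instead of vi makes the change scriptable; because config.ini is edited before cm-5.11.0 is copied with scp, the value reaches every agent. A sketch assuming bash, GNU sed, and a config.ini that contains an uncommented server_host= line; set_server_host is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Replace the server_host value in the CM agent's config.ini.
# Hostnames contain only letters, digits, dots and hyphens, so they are safe
# to embed in the sed replacement.
set_server_host() {
  local ini=$1 host=$2
  if ! grep -q '^server_host=' "$ini"; then
    echo "no server_host line in $ini" >&2
    return 1
  fi
  sed -i "s/^server_host=.*/server_host=$host/" "$ini"
}
```

For this cluster: set_server_host /usr/local/cm/cm/etc/cloudera-scm-agent/config.ini cq-t-h-node0-0-150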

Distribute the JDK and CM to all nodes with scp:

[root@cq-t-h-node0-0-150 cm]# scp -q -r /usr/local/cm/cm-5.11.0 root@cq-t-h-node1-0-151:/usr/local/cm

[root@cq-t-h-node0-0-150 cm]# scp -q -r /usr/local/cm/cm-5.11.0 root@cq-t-h-node2-0-152:/usr/local/cm

[root@cq-t-h-node0-0-150 cm]# scp -q -r /usr/local/cm/cm-5.11.0 root@cq-t-h-node3-0-153:/usr/local/cm

[root@cq-t-h-node0-0-150 cm]# scp -q -r /usr/local/java/jdk1.8.0_112 root@cq-t-h-node3-0-153:/usr/local/java

[root@cq-t-h-node0-0-150 cm]# scp -q -r /usr/local/java/jdk1.8.0_112 root@cq-t-h-node2-0-152:/usr/local/java

[root@cq-t-h-node0-0-150 cm]# scp -q -r /usr/local/java/jdk1.8.0_112 root@cq-t-h-node1-0-151:/usr/local/java

[root@cq-t-h-node0-0-150 cm]#

On the other nodes, create the cm and java symlinks the same way:

cd /usr/local/java/

ln -snf jdk1.8.0_112 java

cd ..

cd cm/

ln -snf cm-5.11.0 cm

ll /usr/local/cm

ll /usr/local/java

Add the following to /etc/profile to set JAVA_HOME and related variables:

export JAVA_HOME=/usr/local/java/java

export JRE_HOME=/usr/local/java/java/jre

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$PATH

Create the cloudera-scm user on all nodes:

useradd --system --home=/usr/local/cm/cm/run/cloudera-scm-server/ --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm

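Start the CM server and CM agents.

On node0, initialize the CM server database (arguments: database type, database name, user, password): /usr/local/cm/cm/share/cmf/schema/scm_prepare_database.sh postgresql cm hadoop hadoop

Start the CM server on node0: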

mkdir /var/lib/cloudera-scm-server

/usr/local/cm/cm/etc/init.d/cloudera-scm-server start

Start the CM agent on node1 to node3:

/usr/local/cm/cm/etc/init.d/cloudera-scm-agent start

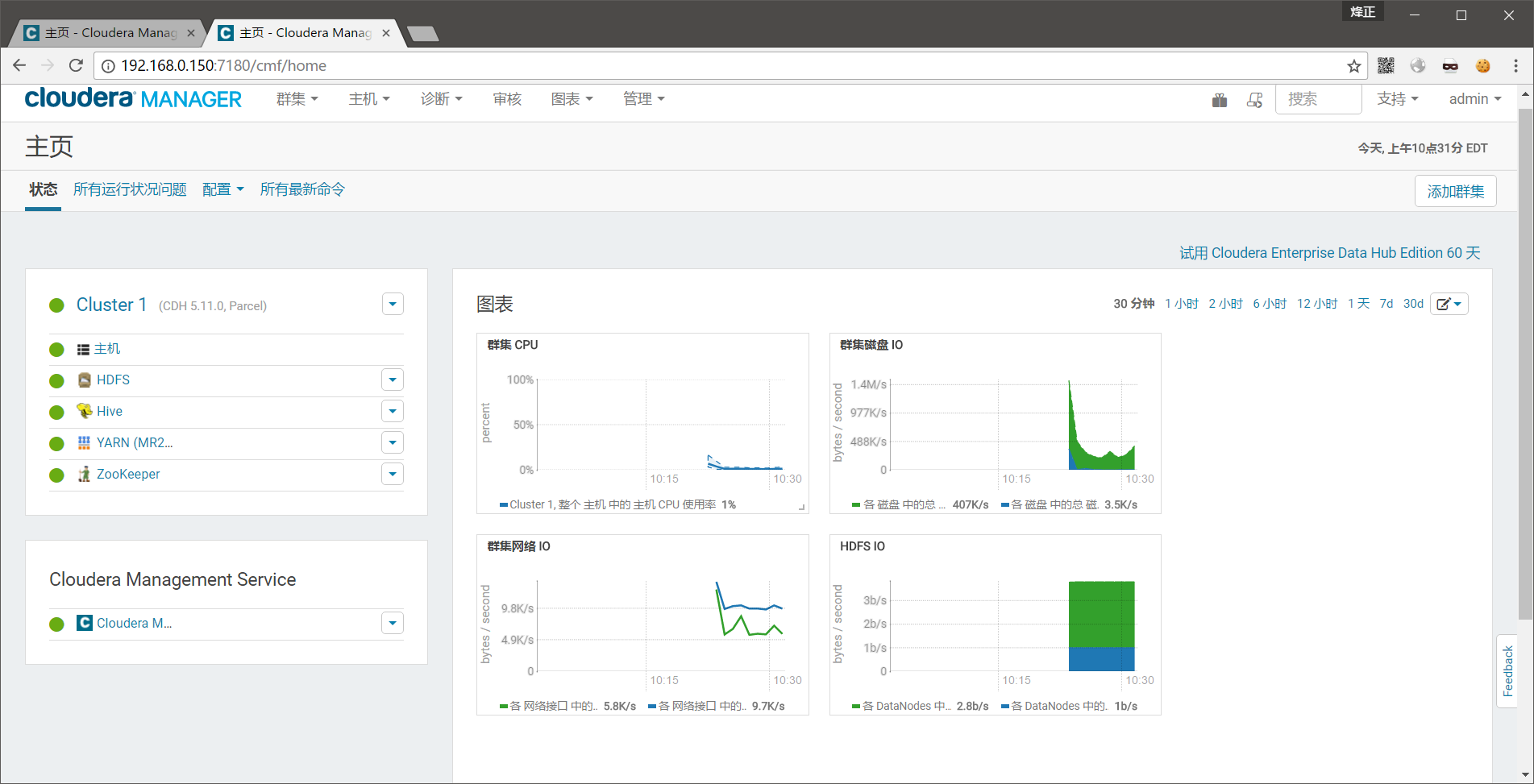

Web UI steps:

Log in to the CM console (port 7180 by default) with username admin and password admin.

Accept the terms and conditions.

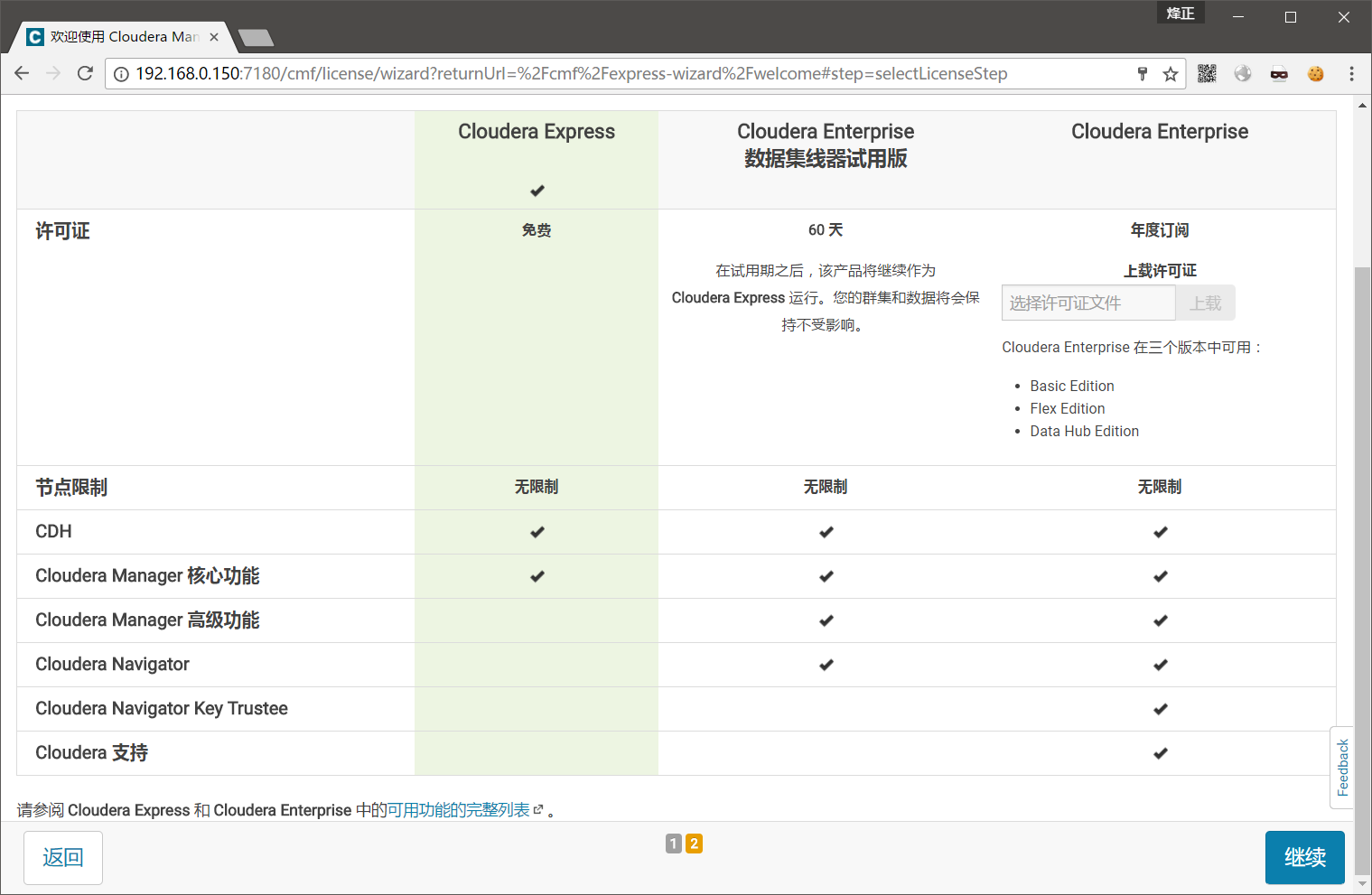

Select the free edition for the installation

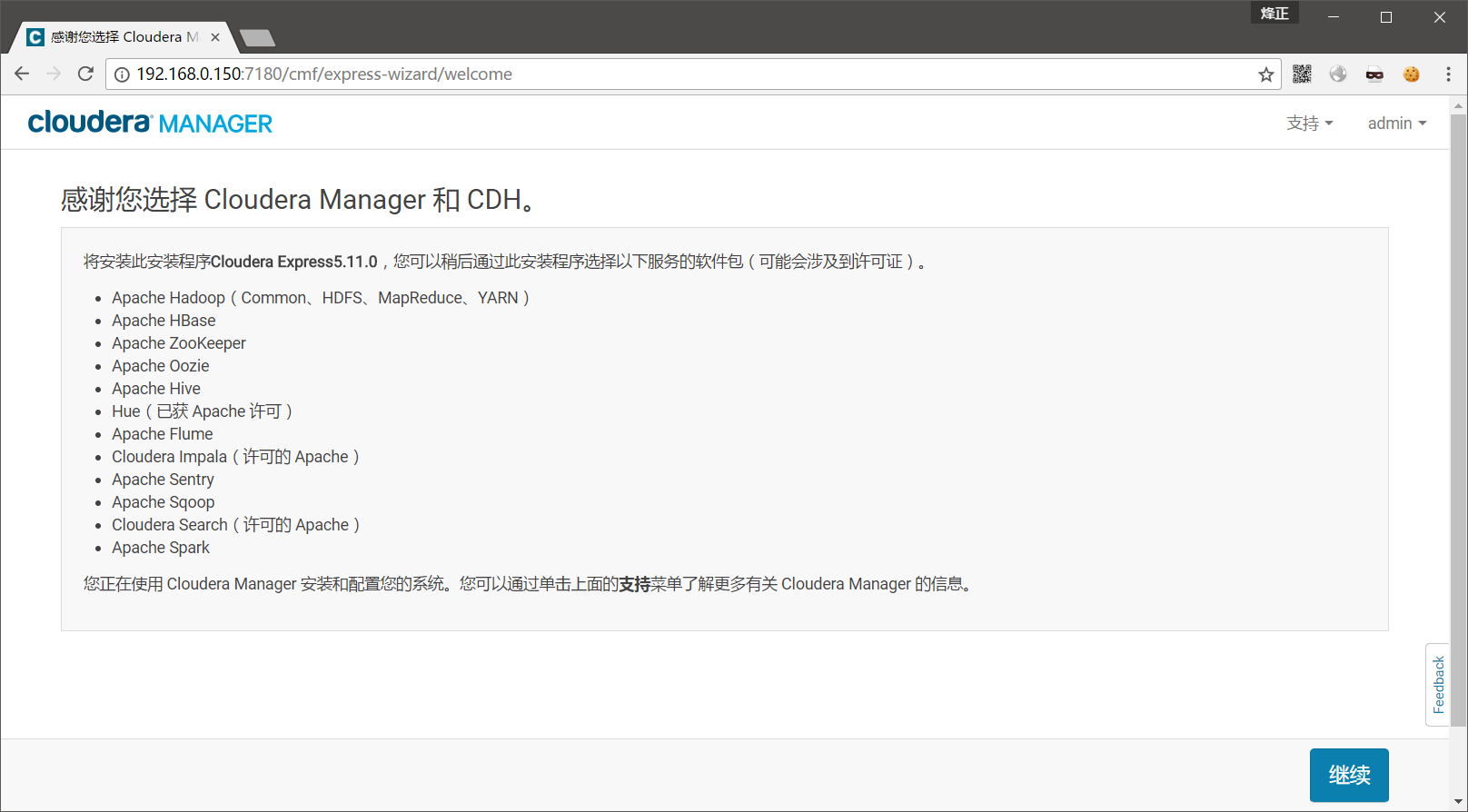

Click Continue

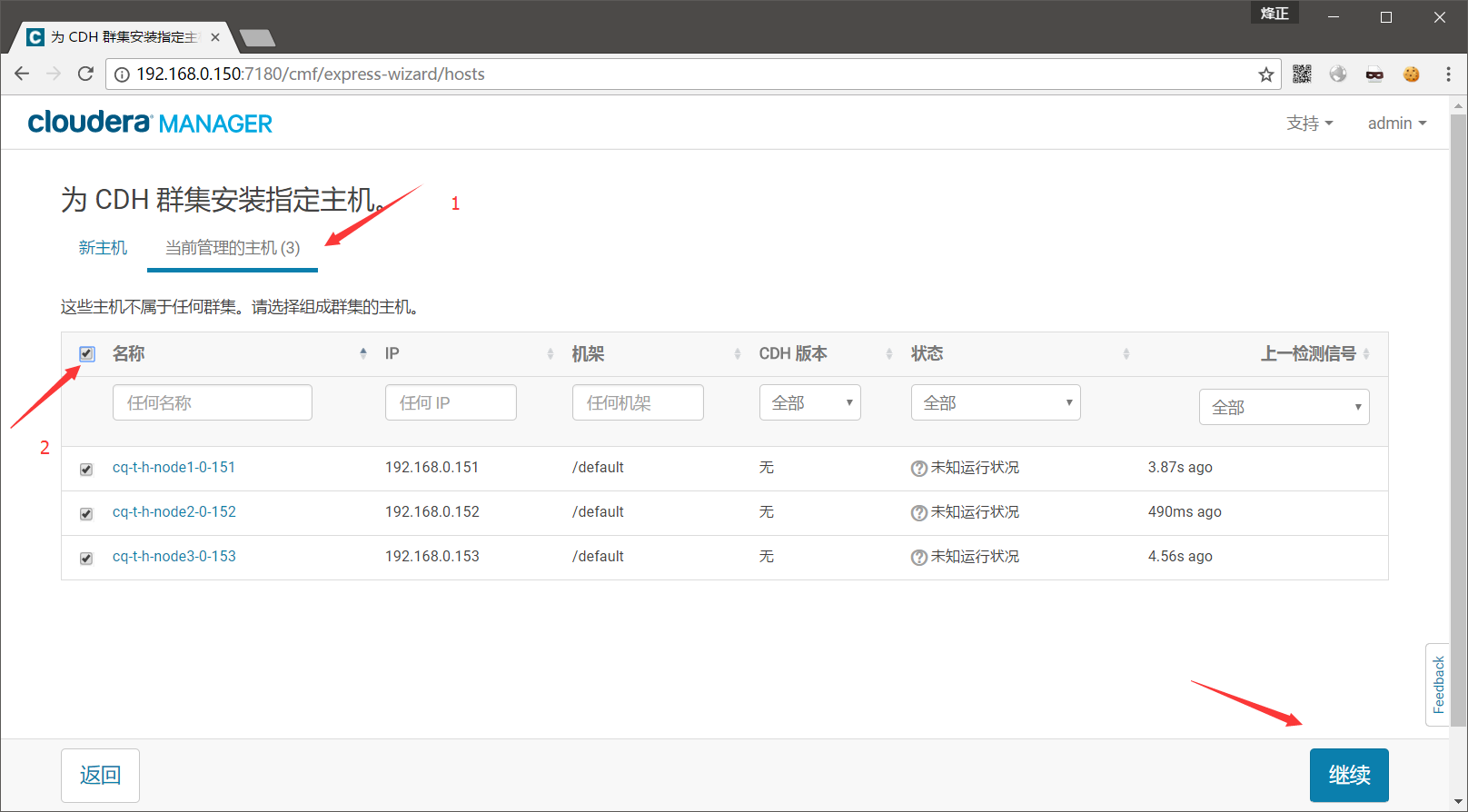

Select the cluster hosts and continue

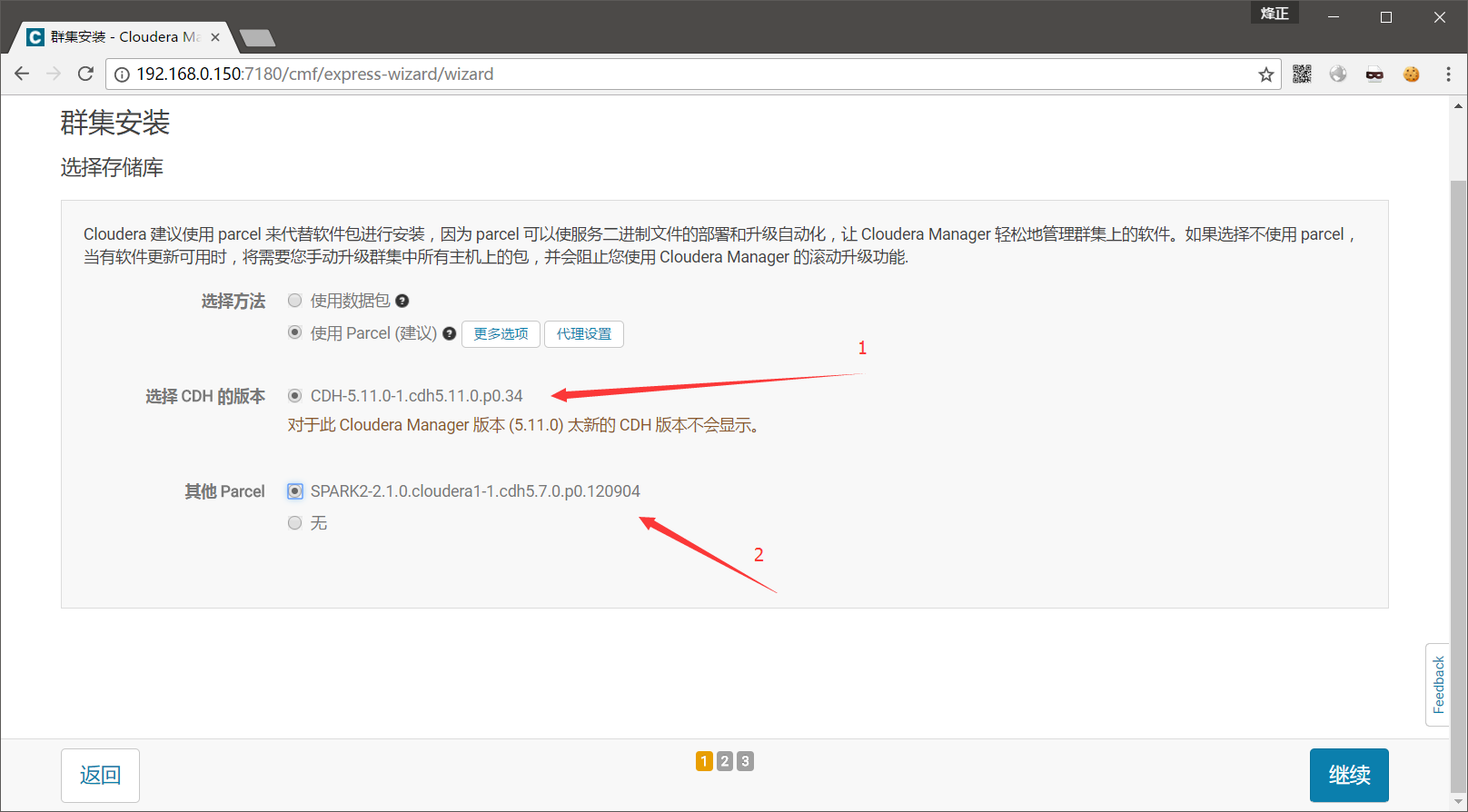

After re-running the previous step, the CDH and Spark2 versions are listed here; select them and click Continue

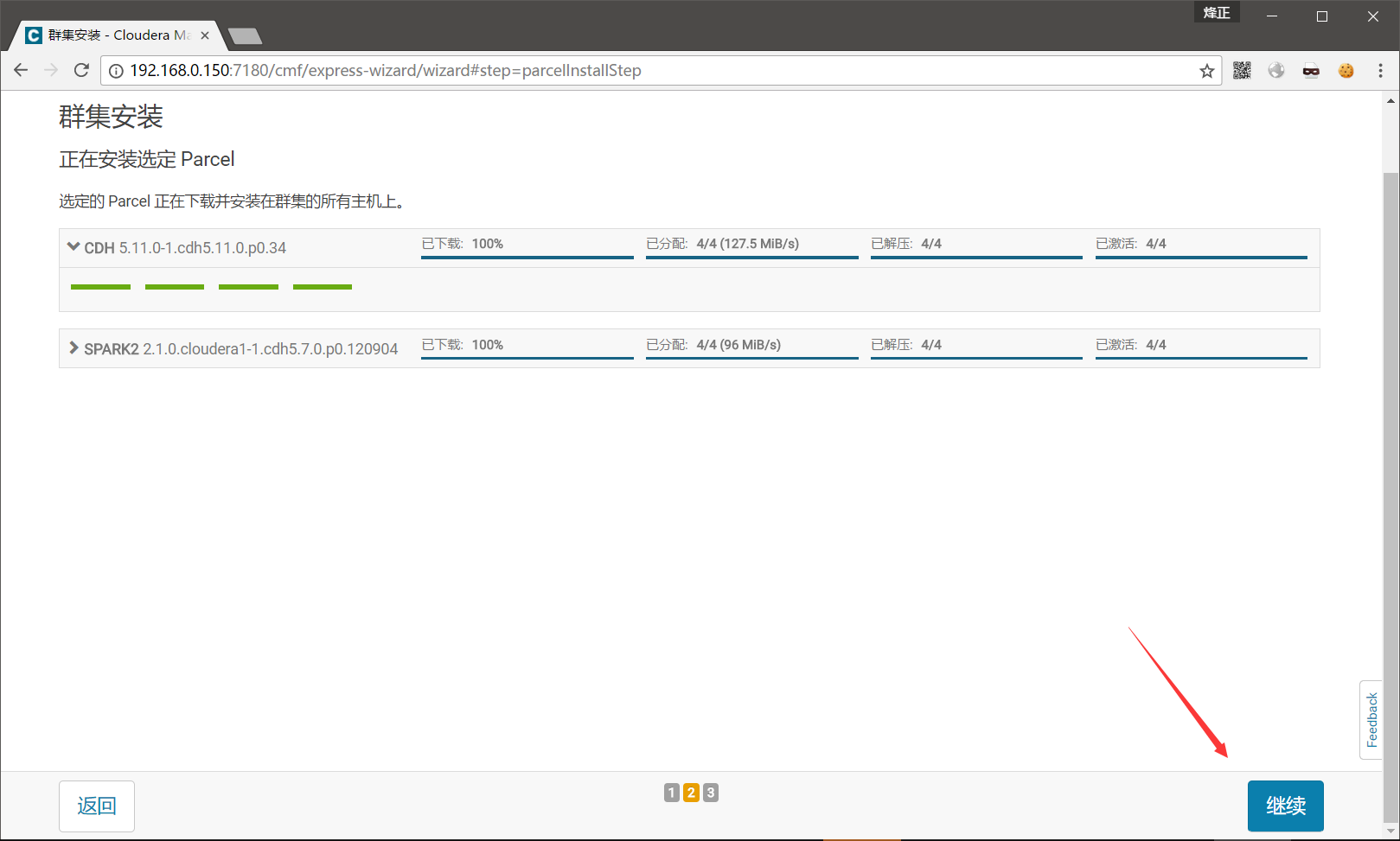

The cluster installation then runs; click Continue

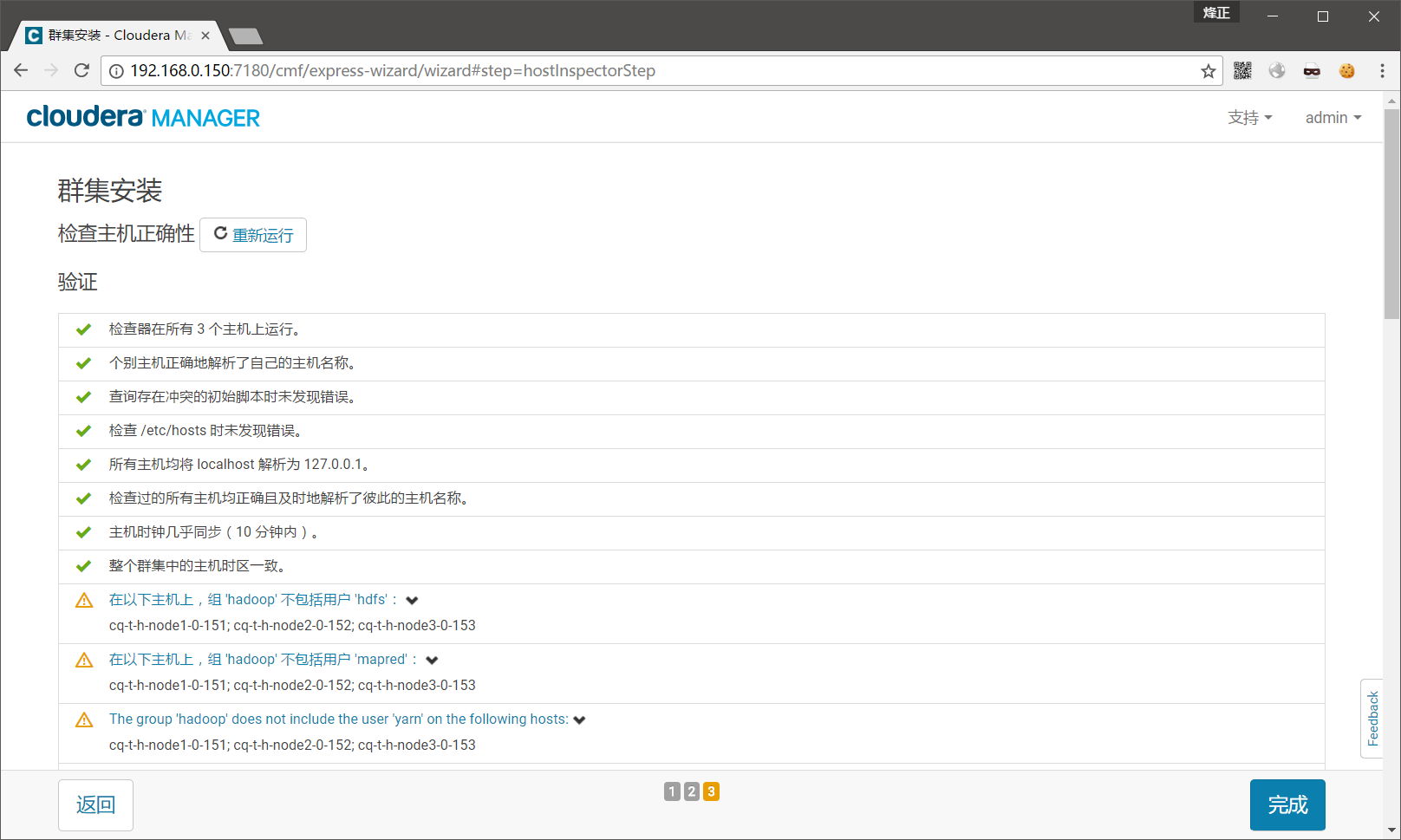

Fix the reported issues as instructed and click Run Again until all checks pass, then click Continue

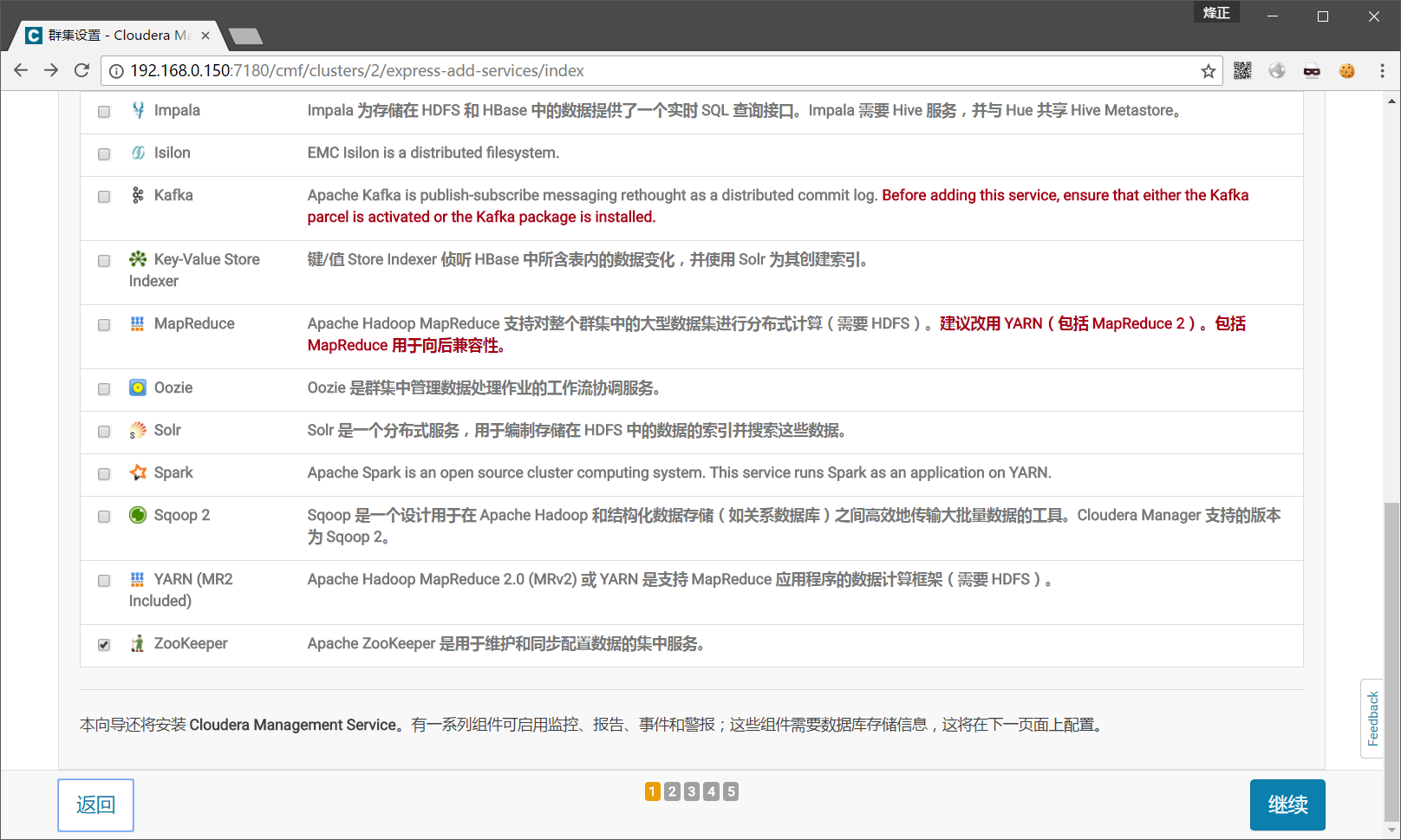

Select the services to install

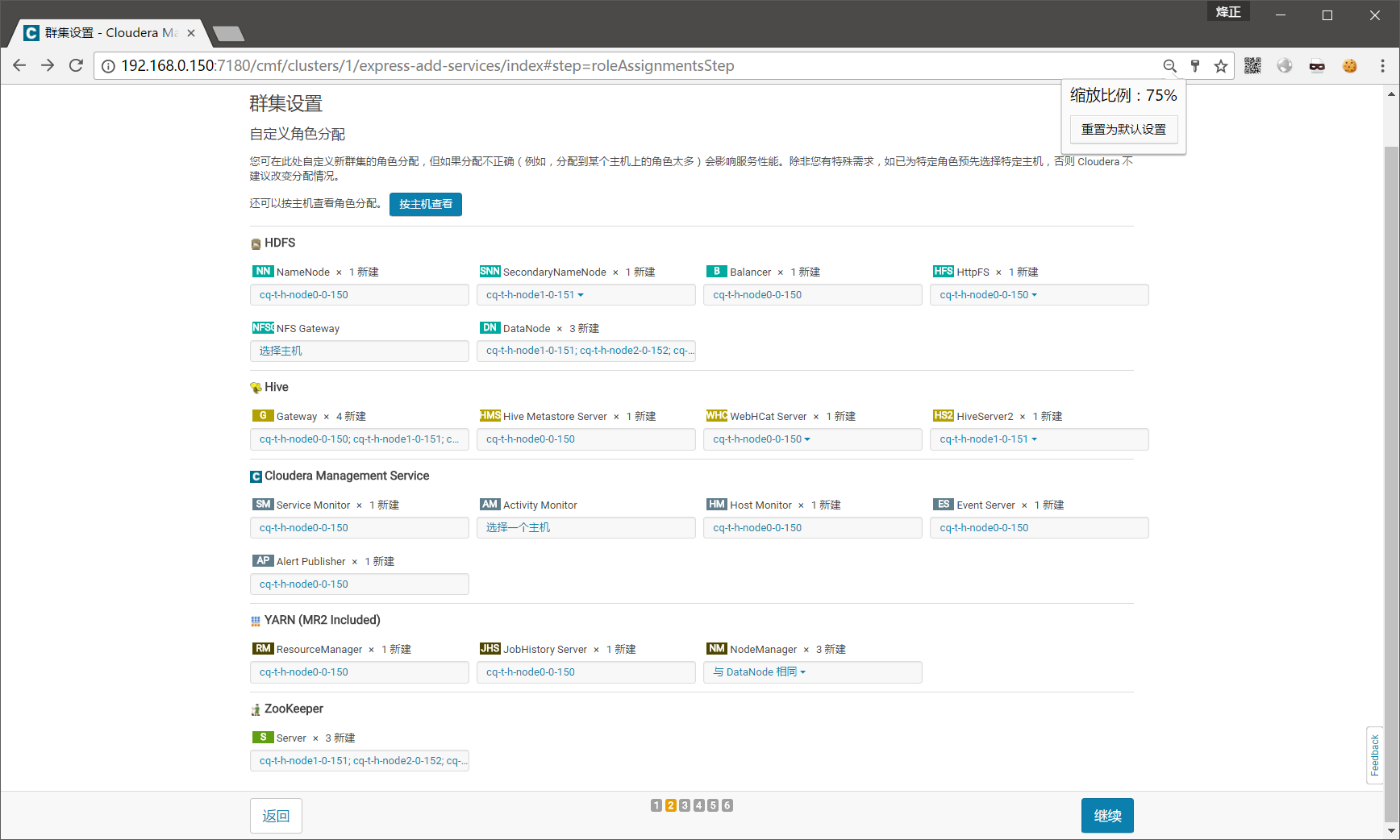

Assign roles

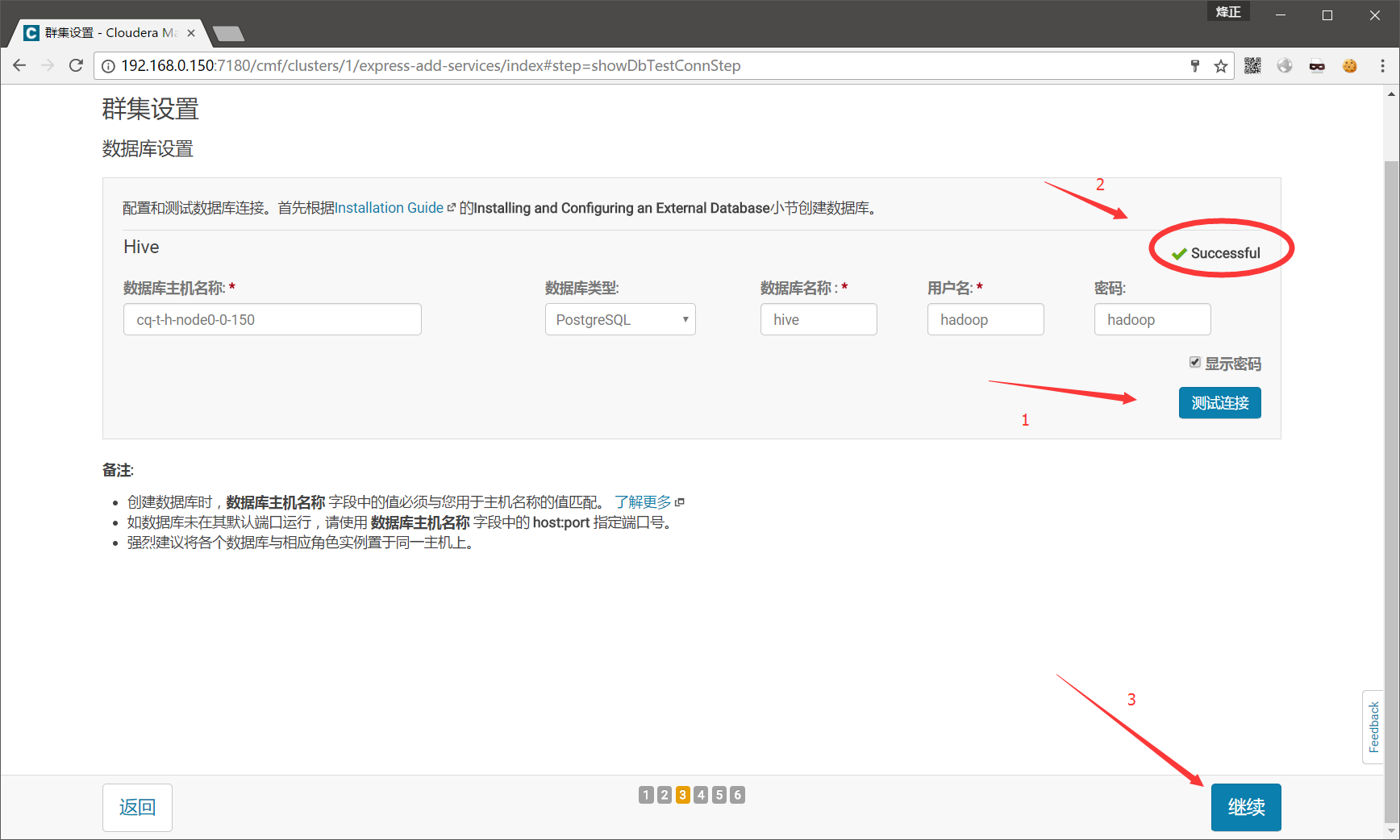

Configure the databases

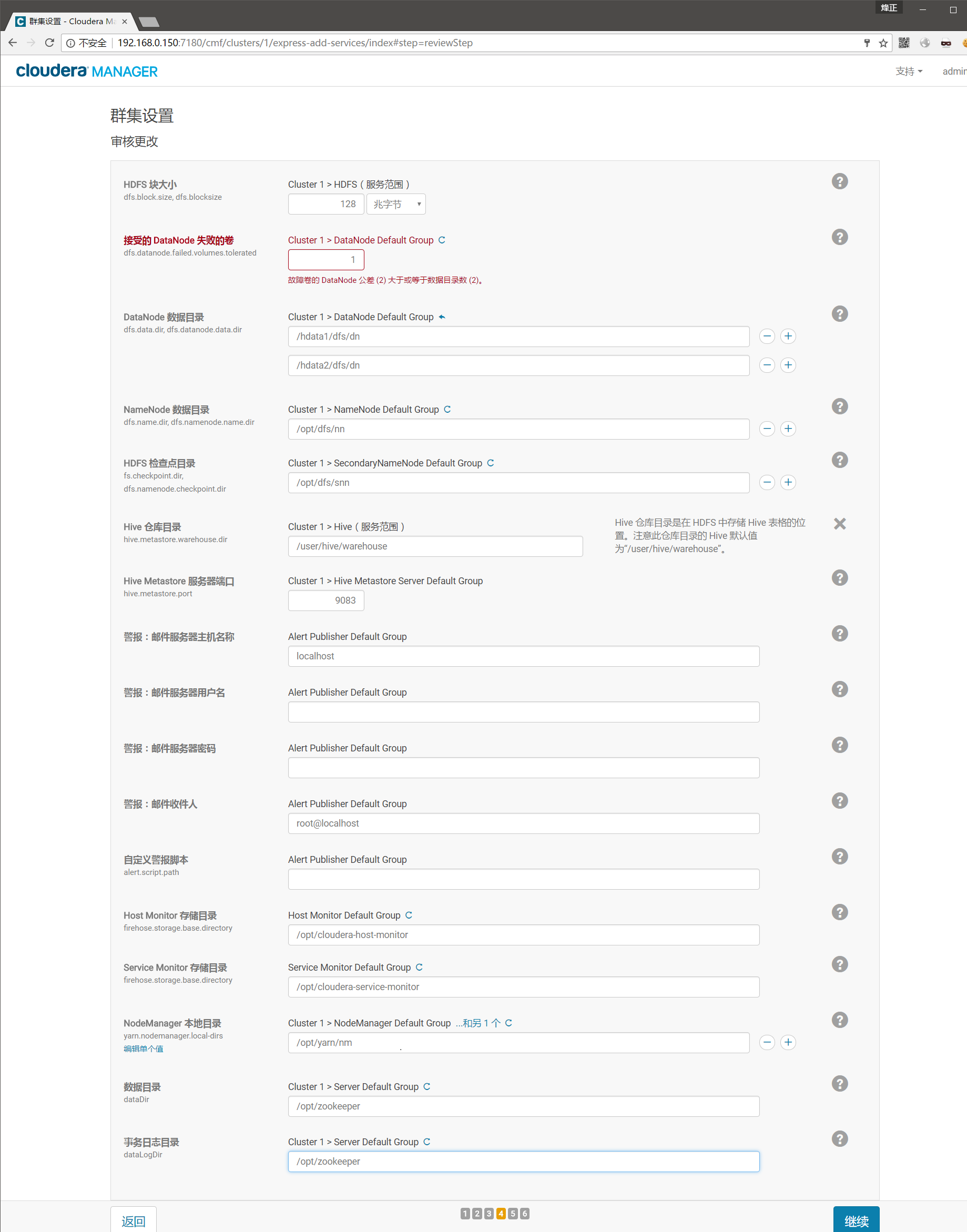

Configure HDFS, ZooKeeper, Hive, and so on

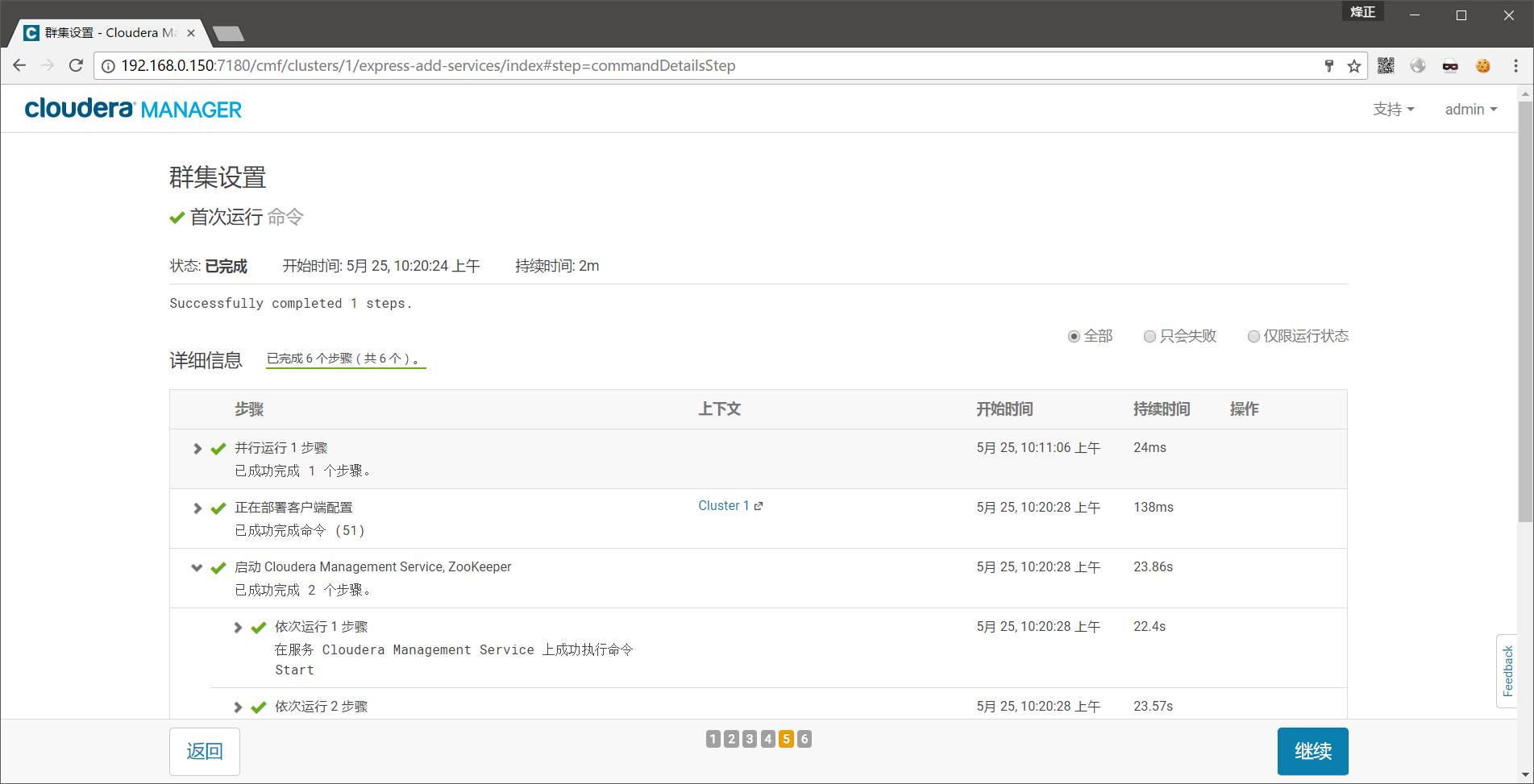

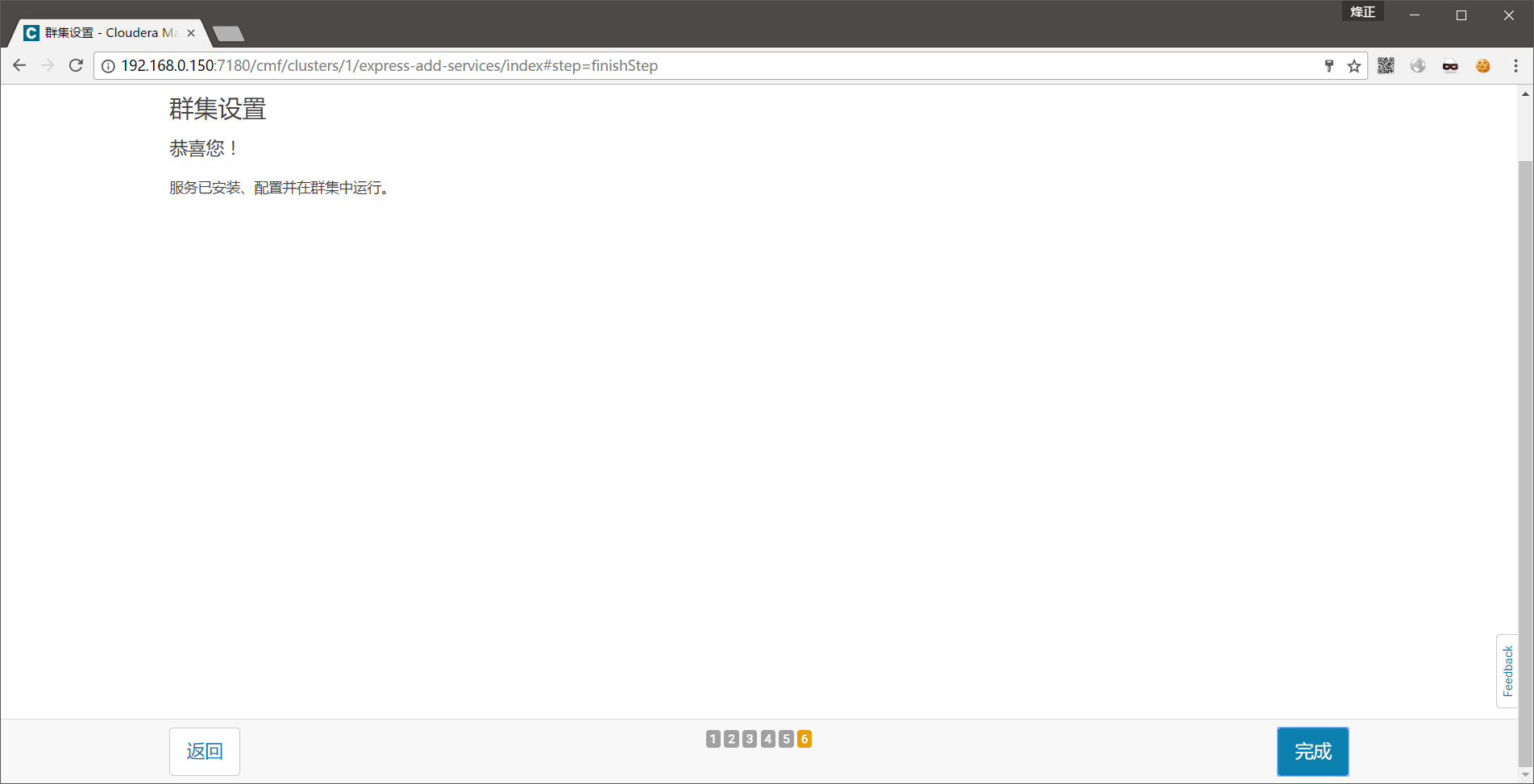

When the cluster installation finishes, click Continue

Installation complete

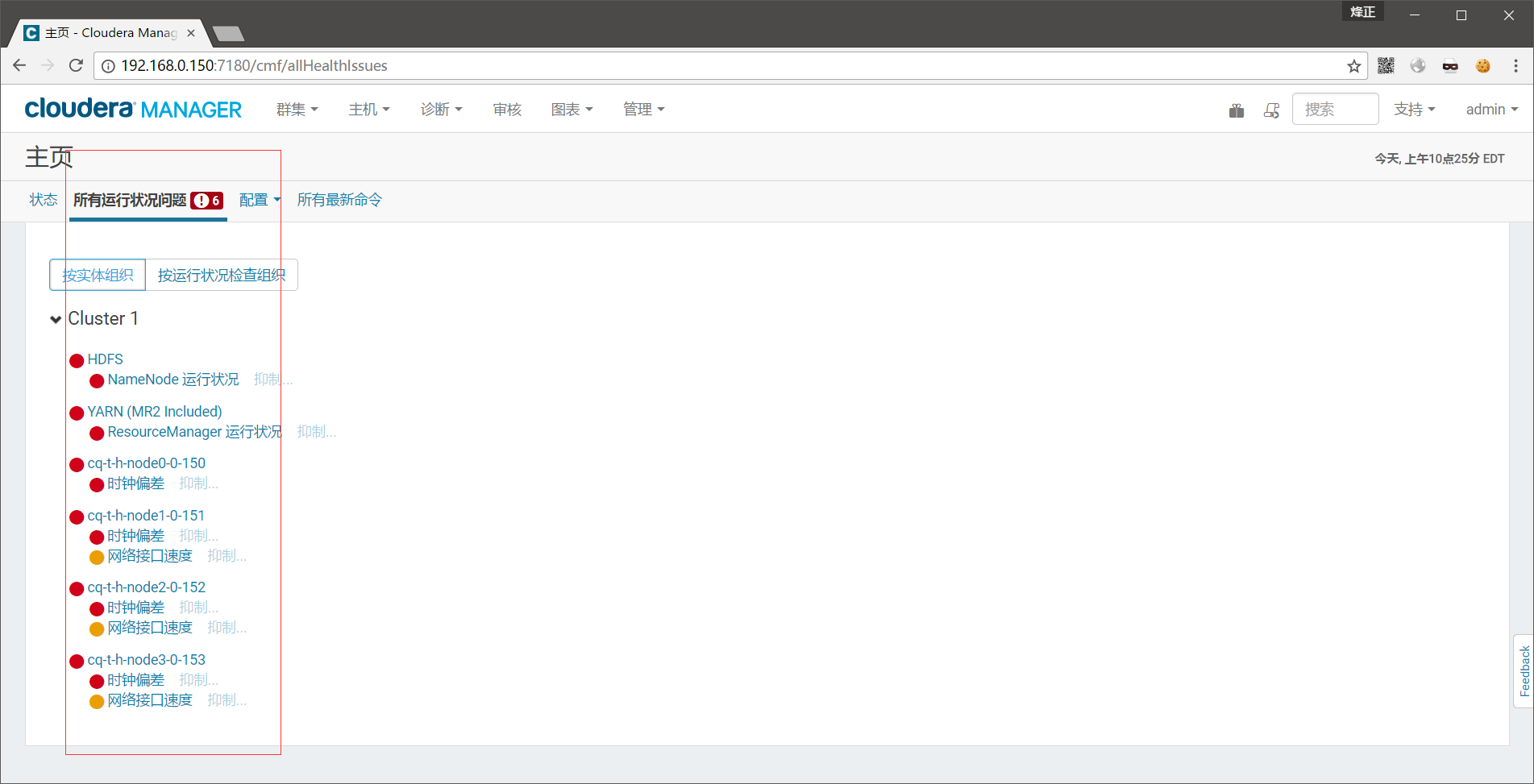

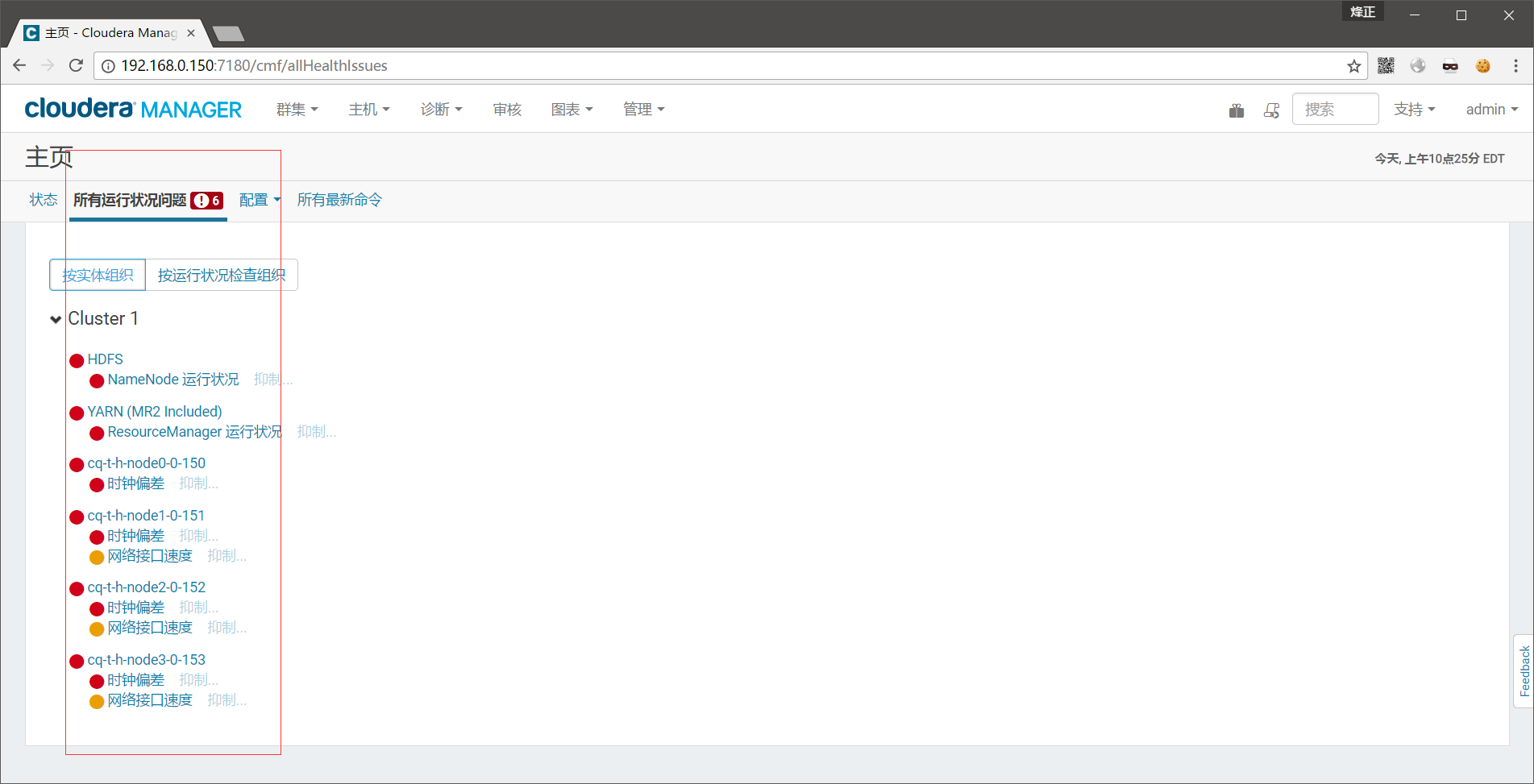

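Resolve the reported problems; the clock offset and network interface warnings can simply be suppressed.

Configure Spark 2 (the following installs Apache Spark 2.1.1):

Upload spark-2.1.1-bin-hadoop2.6.tgz to /usr/local/src, extract it into /usr/local/spark, and create the symlink: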

[root@cq-t-h-node0-0-150 conf]# ll /usr/local/spark/

total 0

lrwxrwxrwx 1 root root 25 May 25 15:06 spark -> spark-2.1.1-bin-hadoop2.6

drwxr-xr-x 12 500 500 193 May 25 15:16 spark-2.1.1-bin-hadoop2.6

[root@cq-t-h-node0-0-150 conf]#

The configuration mainly symlinks hive-site.xml and sets the Hadoop and YARN configuration directories and the classpath:

[root@cq-t-h-node0-0-150 conf]# cat spark-env.sh|grep -v ^#

export HADOOP_CONF_DIR=/etc/hadoop/conf

export YARN_CONF_DIR=/etc/hadoop/conf

export SPARK_DIST_CLASSPATH=$(hadoop classpath)

[root@cq-t-h-node0-0-150 conf]# ll hive-site.xml

lrwxrwxrwx 1 root root 28 May 25 15:21 hive-site.xml -> /etc/hive/conf/hive-site.xml

[root@cq-t-h-node0-0-150 conf]#

Create command shortcuts:

ln -snf /usr/local/spark/spark/bin/spark-sql /usr/bin/ssql

ln -snf /usr/local/spark/spark/bin/spark-shell /usr/bin/sshell

ln -snf /usr/local/spark/spark/bin/pyspark /usr/bin/spy

ln -snf /usr/local/spark/spark/bin/spark-submit /usr/bin/ssubmit
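The four ln -snf lines can also be written as a loop over a name map, which keeps the short names in one place. A sketch assuming bash 4 or later for associative arrays (CentOS 7 ships bash 4.2); make_spark_shortcuts is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Create the short command names for the Spark 2 launchers.
make_spark_shortcuts() {
  local spark_bin=$1 dest=${2:-/usr/bin} short
  local -A targets=(
    [ssql]=spark-sql
    [sshell]=spark-shell
    [spy]=pyspark
    [ssubmit]=spark-submit
  )
  for short in "${!targets[@]}"; do
    ln -snf "$spark_bin/${targets[$short]}" "$dest/$short"
  done
}
```

As root: make_spark_shortcuts /usr/local/spark/spark/bin /usr/bin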

Add the environment variable:

export SPARK_HOME=/usr/local/spark/spark

Start the services at boot

On the master server, add the following to /etc/rc.local:

source /etc/profile

sudo -u postgres pg_ctl start -D /opt/postgres

/usr/local/cm/cm/etc/init.d/cloudera-scm-agent start

/usr/local/cm/cm/etc/init.d/cloudera-scm-server start

On the other servers, add the following to /etc/rc.local:

source /etc/profile

/usr/local/cm/cm/etc/init.d/cloudera-scm-agent start

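Notes

Note 1:

After formatting the disks and configuring automatic mounting, always run mount -a to confirm that the mounts succeed. If a disk fails to mount after a reboot, the machine will not boot normally and must be fixed by hand in the machine room.

Note 2:

After configuring a custom parcel repository, restart the CM server and agents.

Note 3: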

/usr/local/cm/cm/etc/init.d/cloudera-scm-server: line 109: pstree: command not found

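Solution:

yum -y install psmisc

Note 4:

This happened because the agent had been started as the hadoop user before we reinstalled. Delete the agent node's UUID with rm -rf /opt/cm-5.4.7/lib/cloudera-scm-agent/* (in this installation the directory is /usr/local/cm/cm/lib/cloudera-scm-agent/) and restart the agent.

Note 5: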

ERROR WebServerImpl:com.cloudera.server.web.cmf.search.components.SearchRepositoryManager: The server storage directory [/var/lib/cloudera-scm-server] doesn't exist.

Solution:

mkdir /var/lib/cloudera-scm-server

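Note 6:

After a reinstall, the following warning also appears:

Transparent Huge Page Compaction is enabled and can cause significant performance problems. Run "echo never > /sys/kernel/mm/transparent_hugepage/defrag" and "echo never > /sys/kernel/mm/transparent_hugepage/enabled" to disable this setting, and then add the same commands to an init script such as /etc/rc.local so that they are applied when the system reboots. The following hosts are affected: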

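Cause:

On CentOS 7, /etc/rc.d/rc.local is not executable by default, so commands added to it are not run at boot.

Solution:

chmod +x /etc/rc.d/rc.local

Avoid chmod 777: a world-writable rc.local lets any user add commands that run as root at boot.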
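The fix for note 6 has two parts: the two echo never lines must be in rc.local, and rc.local must be executable, because rc-local.service on CentOS 7 only runs it when it has the execute bit. A sketch that does both idempotently, assuming bash; ensure_thp_disabled_at_boot is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Ensure rc.local disables transparent huge pages at boot and is executable.
ensure_thp_disabled_at_boot() {
  local rc=${1:-/etc/rc.d/rc.local} knob line
  [[ -e $rc ]] || printf '#!/bin/bash\n' > "$rc"
  for knob in defrag enabled; do
    line="echo never > /sys/kernel/mm/transparent_hugepage/$knob"
    # Append each line only once.
    grep -qxF -- "$line" "$rc" || printf '%s\n' "$line" >> "$rc"
  done
  chmod 755 "$rc"   # executable, but not world-writable
}
```

To apply the setting immediately without rebooting, also run the two echo never commands once as root.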

Note 7:

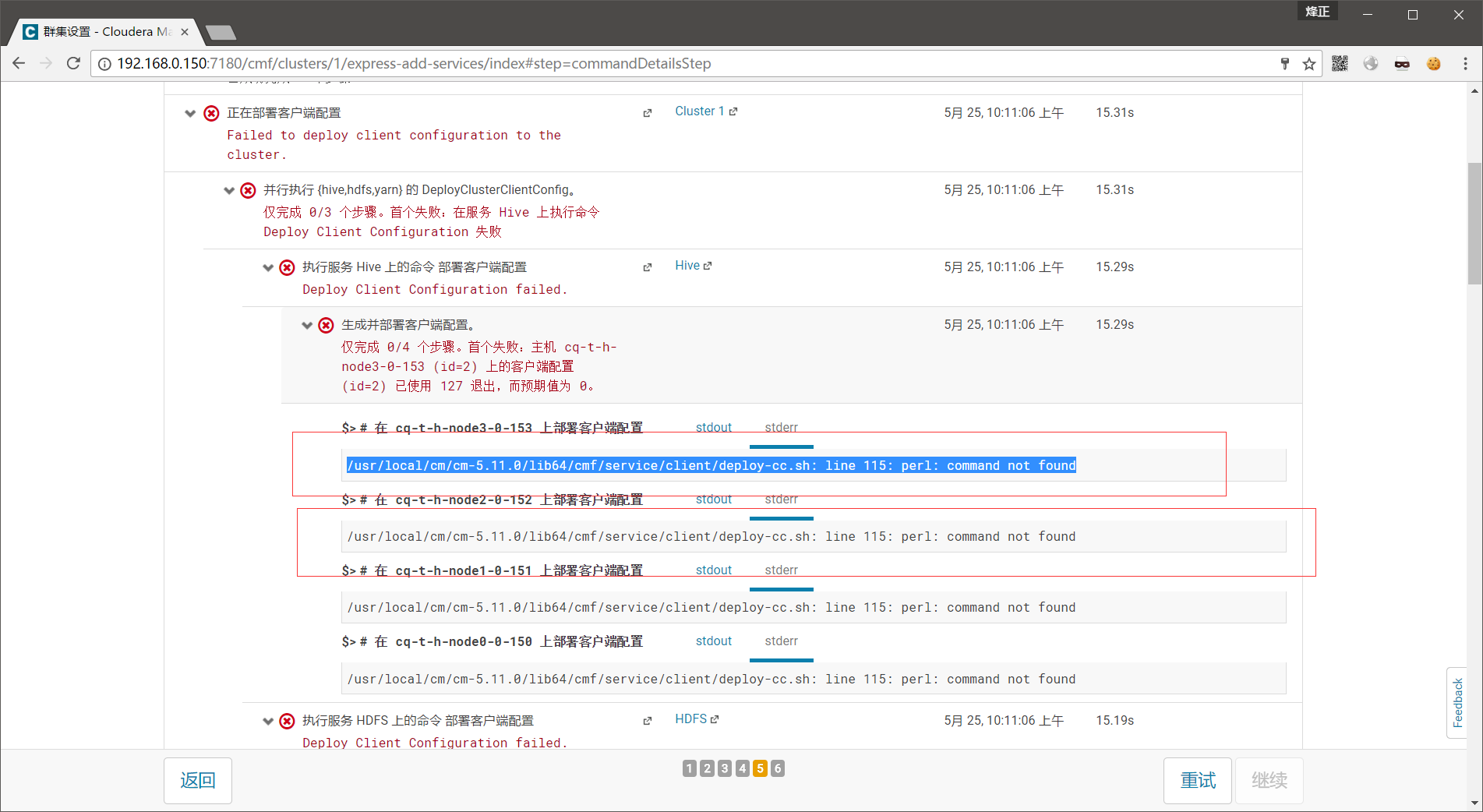

The following error appears when installing Hive:

/usr/local/cm/cm-5.11.0/lib64/cmf/service/client/deploy-cc.sh: line 115: perl: command not found

Solution:

yum install -y perl

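Note 8:

Clock offset: in practice the cluster clocks are synchronized with NTP.

Note 9:

If a hostname ends with a space or starts with a digit, the installer cannot discover that host when installing the cluster.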
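A quick check against the hostname problem in note 9. It is stricter than RFC 1123, which does allow a leading digit: the first character must be a letter, and each dot-separated label may contain only letters, digits, and inner hyphens, so a trailing space is rejected. A sketch assuming bash; valid_cluster_hostname is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Succeed only for hostnames that start with a letter and consist of
# letters, digits and inner hyphens, in dot-separated labels.
valid_cluster_hostname() {
  local name=$1
  local re='^[A-Za-z]([A-Za-z0-9-]*[A-Za-z0-9])?(\.[A-Za-z0-9]([A-Za-z0-9-]*[A-Za-z0-9])?)*$'
  [[ $name =~ $re ]]
}
```

On each node, valid_cluster_hostname "$(cat /etc/hostname)" fails if the stored name has a trailing space.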

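Background:

HDFS balancer:

In an HDFS cluster, disk utilization easily becomes uneven across machines, for example after new DataNodes are added. An unbalanced HDFS causes many problems: MapReduce jobs cannot take full advantage of data locality, network bandwidth between machines is used less efficiently, and some disks sit unused. Keeping HDFS data balanced is therefore important. Hadoop includes a Balancer program that brings the cluster back to a balanced state; run it as follows: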

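sudo -u hdfs hdfs balancer -threshold 10

Here -threshold is the maximum allowed difference, in percentage points, between each DataNode's disk utilization and the cluster average (a plain number, not "10%"). On an Apache Hadoop 2 layout the equivalent script is $HADOOP_HOME/sbin/start-balancer.sh -threshold 10.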
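The balancer considers a DataNode balanced when its utilization (used / capacity) differs from the cluster's utilization (total used / total capacity) by no more than the threshold, in percentage points. A sketch that applies that rule to per-node figures, assuming bash and awk; the "name used capacity" input format and check_balance are assumptions for illustration, not the output of an HDFS command:

```shell
#!/usr/bin/env bash
# Input: one "name used capacity" line per DataNode (same unit on every line).
# Output: "name utilization% ok|unbalanced" per node.
check_balance() {
  local threshold=$1
  awk -v t="$threshold" '
    NF == 3 { name[++n] = $1; used[n] = $2; cap[n] = $3; tu += $2; tc += $3 }
    END {
      if (tc == 0) exit 1          # no usable input
      avg = 100 * tu / tc          # cluster utilization in percent
      for (i = 1; i <= n; i++) {
        u = cap[i] ? 100 * used[i] / cap[i] : 0
        d = u - avg
        if (d < 0) d = -d
        printf "%s %.1f %s\n", name[i], u, (d <= t ? "ok" : "unbalanced")
      }
    }'
}
```

With nodes at 50%, 10%, and 30% of equal capacity, the cluster average is 30%, so with a threshold of 10 only the 30% node is reported as ok.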

HDFS NFS Gateway:

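The HDFS NFS Gateway lets clients mount HDFS as part of their local file system over NFSv3. The current NFS Gateway supports the following:

1. Users can browse HDFS through the local file system on operating systems that support NFSv3.

2. Users can download files from HDFS to the local file system and upload files from the local file system to HDFS.

3. Users can stream data directly to HDFS through the mount point; appending is supported, but random writes are not.

Link: http://blog.csdn.net/rzliuwei/article/details/38388279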